
Q1. What is congestion in a computer network?

Ans. Congestion is a situation in which too many packets are present in the network, causing packet delay and loss that degrade the performance of the network. The network layer and the transport layer share the responsibility for handling congestion. Because congestion occurs inside the network, the network layer experiences it directly and must ultimately decide what to do with the excess packets.

Q2. What is congestion control? What are the causes of congestion?

Ans. Congestion is an important issue that can arise in packet-switched networks. It is a situation in which too many packets are present in a part of the subnet, so that performance degrades. Congestion may occur when the load on the network (i.e. the number of packets sent to the network) is greater than the capacity of the network (i.e. the number of packets the network can handle).

In other words, when too much traffic is offered, congestion sets in and performance degrades sharply.

Causes of Congestion:

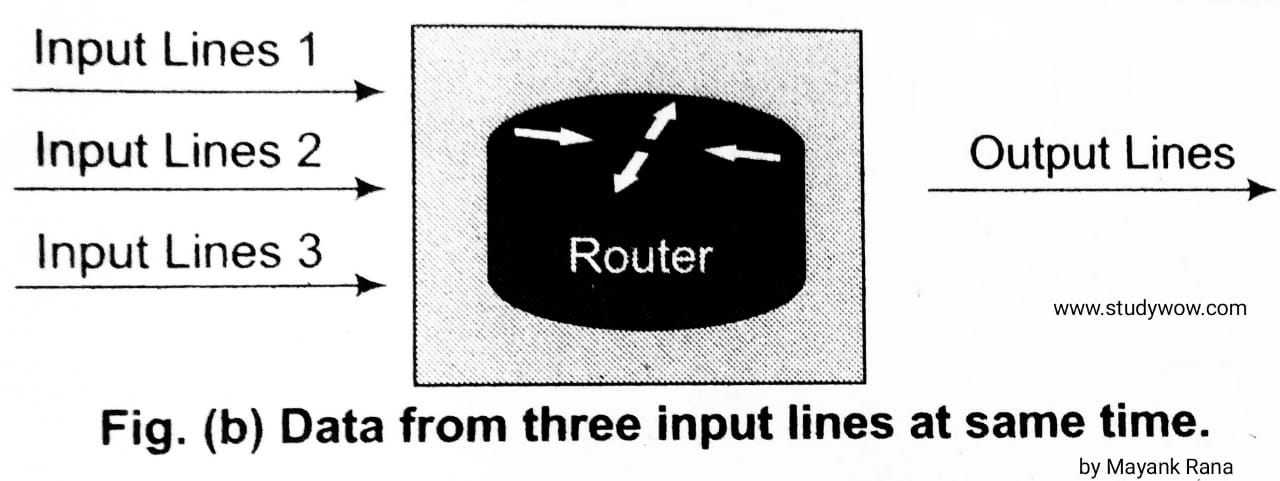

The various causes of congestion in a subnet are as follows:

1. The input traffic rate exceeds the capacity of the output lines. Suppose that streams of packets suddenly start arriving on three or four input lines and all of them need the same output line. A queue will then build up.

If there is insufficient memory to hold all the packets, packets will be lost. Increasing the memory to an unlimited size does not solve the problem either, because by the time packets reach the front of the queue, they have already timed out (while waiting in the queue).

When the timer goes off, the source transmits duplicate packets, which are also added to the queue. Thus the same packets are added again and again, increasing the load all the way to the destination.

2. The routers are too slow to perform bookkeeping tasks (queuing buffers, updating tables, etc.).

3. The routers' buffers are too small.

4. Congestion in a subnet can occur if the processors are slow. A slow CPU at a router performs routine tasks (such as queuing buffers and updating tables) slowly. As a result, queues build up even though there is excess line capacity.

5. Congestion is also caused by slow links. One might expect this problem to be solved by using high-speed links.

But this is not always the case. Sometimes an increase in link bandwidth can make congestion worse, because higher-speed links may make the network more unbalanced.

Congestion can make itself worse. If a router does not have free buffers, it starts ignoring (discarding) newly arriving packets.

When these packets are discarded, the sender may retransmit them after its timer goes off. The sender transmits such packets again and again until it receives acknowledgements for them.

Therefore, repeated retransmission of packets adds to the load, and congestion spreads back toward the sending end.
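To see how cause 1 and the retransmission effect interact, here is a toy simulation in Python. It is a sketch under simplifying assumptions that are not in the text: time moves in discrete ticks, each of three input lines offers one packet per tick for the same output line, the output line forwards one packet per tick, the buffer holds a fixed number of packets, and a dropped packet is resent on the very next tick as a crude stand-in for a sender's timeout. The function name `simulate_output_port` is hypothetical.

```python
def simulate_output_port(ticks, input_lines, buffer_size, retransmit=True):
    """Toy model of one router output line shared by several input lines.

    Assumptions (illustrative only): each input line offers one new packet per
    tick, the output line forwards one packet per tick, and if `retransmit` is
    True every dropped packet is offered again on the next tick.
    """
    queue_len = 0        # packets currently waiting in the router's buffer
    pending_retx = 0     # dropped packets the senders will resend next tick
    offered = sent = dropped = 0
    for _ in range(ticks):
        arrivals = input_lines + (pending_retx if retransmit else 0)
        offered += arrivals
        pending_retx = 0
        for _ in range(arrivals):
            if queue_len < buffer_size:
                queue_len += 1            # room in the buffer: enqueue
            else:
                dropped += 1              # buffer full: packet is discarded
                pending_retx += 1
        if queue_len > 0:
            queue_len -= 1                # output line sends one packet
            sent += 1
    return {"offered": offered, "sent": sent,
            "dropped": dropped, "queued": queue_len}


if __name__ == "__main__":
    print(simulate_output_port(10, 3, 5, retransmit=False))
    print(simulate_output_port(10, 3, 5, retransmit=True))
```

With these parameters the output line forwards 10 packets in both runs, but with retransmission the offered load rises from 30 to 86 packets and the drops rise from 16 to 72. The extra traffic does no useful work, which is the self-reinforcing effect described above.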

Q3. What are the differences between flow control and congestion control?

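Ans. Differences between Flow Control and Congestion Control:

| Basis | Flow Control | Congestion Control |
|---|---|---|
| Goal | Prevents a fast sender from overwhelming a slow receiver. | Prevents the hosts, taken together, from overloading the network. |
| Handled by | The sending machine, regulated by the receiver. | Routers inside the network. |
| Buffering | Concerns the receiving process's buffer. | Concerns the buffers in the routers. |
| Bandwidth | Does not block the transmission medium. | Congestion blocks the transmission medium. |
| Packet loss | Packets are lost only between that sender and receiver. | Packets of other users sharing the network are lost. |
| Performance | Has little effect on overall network performance. | Directly affects overall network performance. |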

Q4. Describe the commonly used congestion control algorithms.

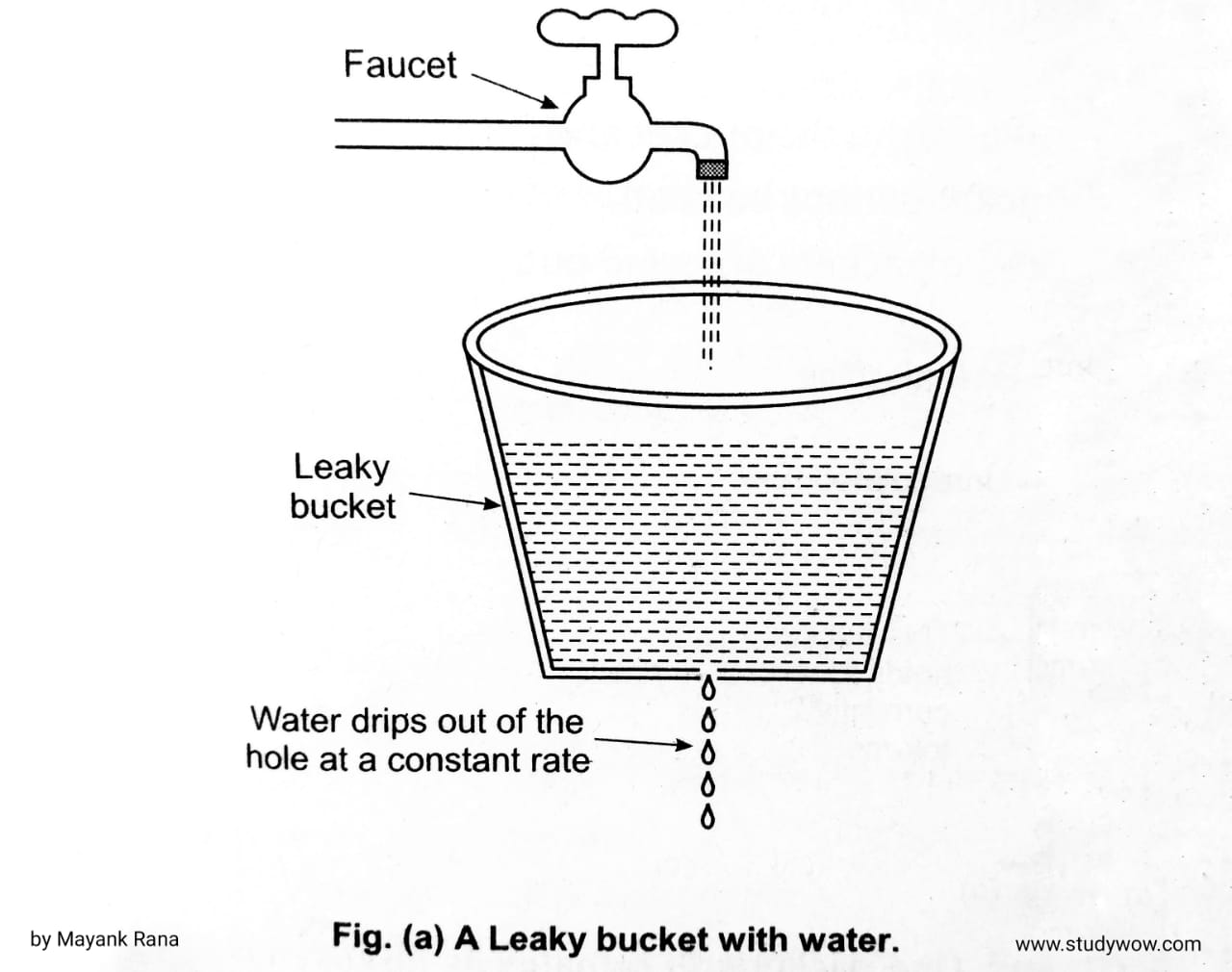

Ans. Two commonly used congestion control (traffic shaping) algorithms are described below.

1. Leaky Bucket Algorithm:

The leaky bucket algorithm controls the rate at which traffic is sent to the network. It works like a bucket with a hole at the bottom:

• Imagine a bucket with a small hole in the bottom, as illustrated in Fig. (a). No matter the rate at which water enters the bucket, the outflow is at a constant rate, r, when there is any water in the bucket, and zero when the bucket is empty.

• Once the bucket is full, any additional water entering it spills over the sides and is lost (i.e. it does not appear in the output under the hole).

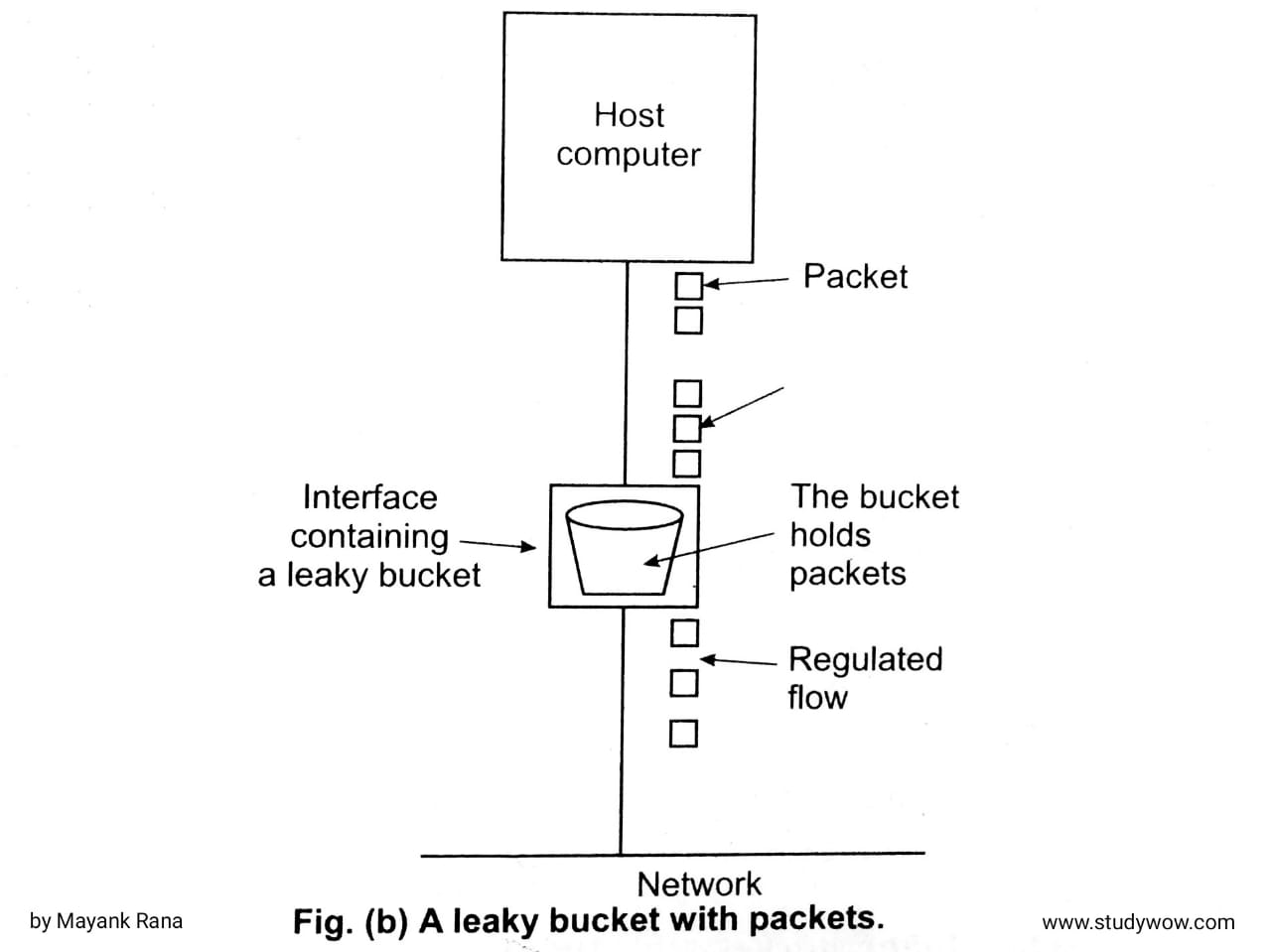

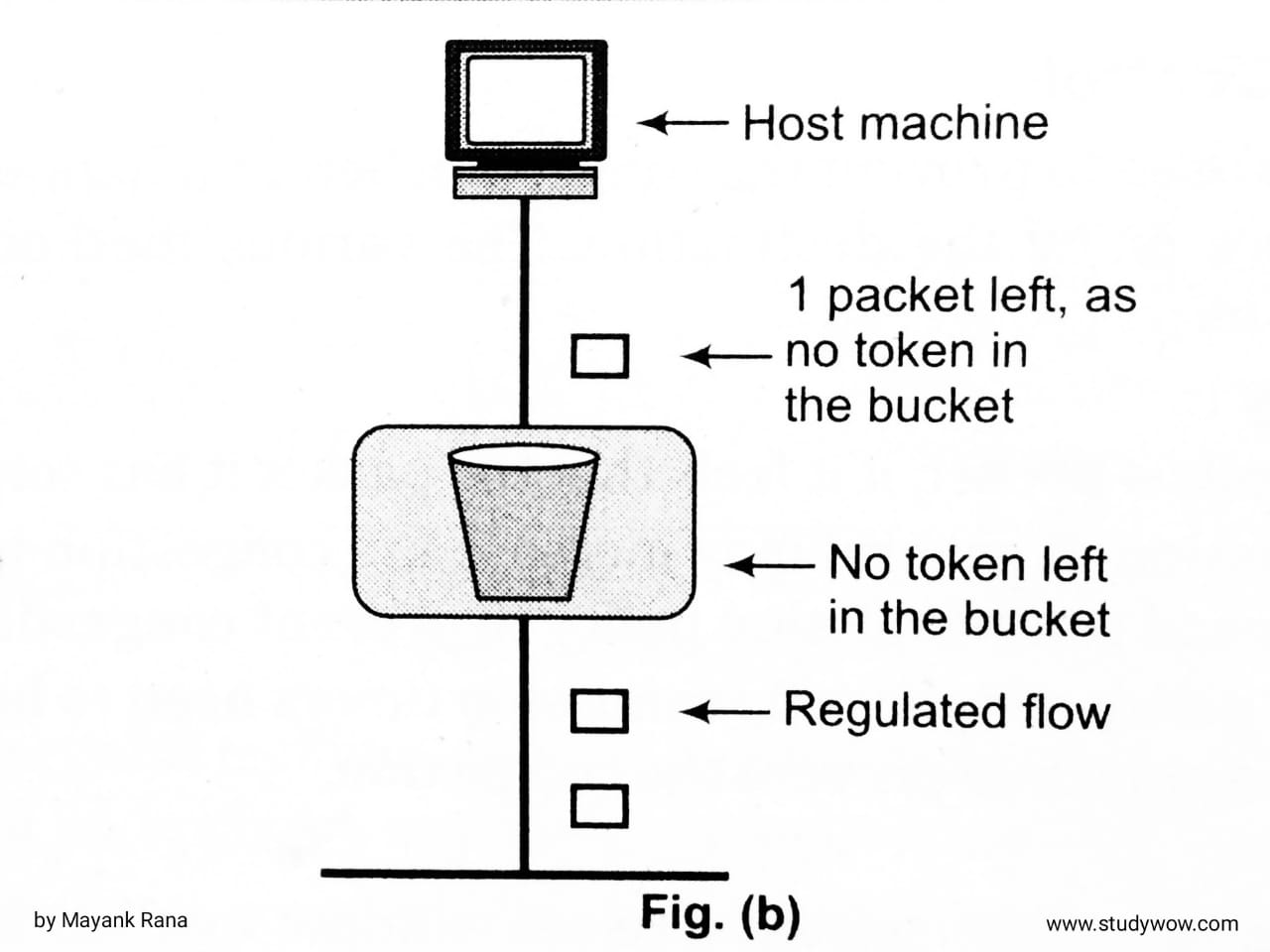

• The same idea can be applied to packets, as shown in Fig (b). Conceptually, each host is connected to the network by an interface containing a leaky bucket, that is, a finite internal queue. If a packet arrives at the queue when it is full, the packet is discarded.

• In other words, if one or more processes within the host try to send a packet when the maximum number is already queued, the new packet is unceremoniously discarded.

• The host is allowed to put one packet per clock tick onto the network. This can be enforced by the interface card. The mechanism turns an uneven flow of packets from the user processes inside the host into an even flow of packets onto the network, smoothing out bursts and greatly reducing the chances of congestion.
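The bullet points above can be sketched as a small Python class. This is a minimal illustration, assuming a bucket whose size is measured in packets and a `tick()` method called once per clock tick; the names `LeakyBucket`, `arrive`, `tick` and `shape` are hypothetical.

```python
from collections import deque


class LeakyBucket:
    """Packet-counting leaky bucket: a finite queue drained one packet per tick."""

    def __init__(self, capacity):
        self.capacity = capacity      # maximum number of packets the bucket holds
        self.queue = deque()
        self.discarded = 0

    def arrive(self, packet):
        """Queue a packet from the host; discard it if the bucket is full."""
        if len(self.queue) >= self.capacity:
            self.discarded += 1
            return False
        self.queue.append(packet)
        return True

    def tick(self):
        """Put at most one packet onto the network per clock tick."""
        return self.queue.popleft() if self.queue else None


def shape(arrivals_per_tick, capacity):
    """Feed a bursty arrival pattern in and record what leaks out each tick."""
    bucket = LeakyBucket(capacity)
    output, next_id = [], 0
    for count in arrivals_per_tick:
        for _ in range(count):
            bucket.arrive(f"p{next_id}")
            next_id += 1
        output.append(bucket.tick())
    return output, bucket.discarded


if __name__ == "__main__":
    # Two bursts (4 packets, then 3) into a bucket that holds 3 packets.
    print(shape([4, 0, 0, 0, 3, 0, 0], capacity=3))
    # (['p0', 'p1', 'p2', None, 'p4', 'p5', 'p6'], 1)
```

The bursty input leaves the bucket as at most one packet per tick, and the packet that arrived while the bucket was full (`p3`) is discarded.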

The following steps are performed:

Step 1. When the host has to send a packet, the packet is thrown into the bucket.

Step 2. The bucket leaks at a constant rate, meaning the network interface transmits packets at a constant rate.

Step 3. Bursty traffic is converted to a uniform traffic by the leaky bucket.

Step 4. In practice the bucket is a finite queue that outputs at a finite rate.

Step 5. If the traffic consists of variable-length packets, the fixed output rate must be based on the number of bytes or bits. The following is an algorithm for variable-length packets:

(i) Initialise a counter to n at each tick of the clock.

(ii) If the counter is greater than the size of the packet at the front of the queue, send the packet and decrement the counter by the packet size. Repeat this step until the counter is smaller than the size of the next packet.

(iii) Reset the counter and go to step (i).

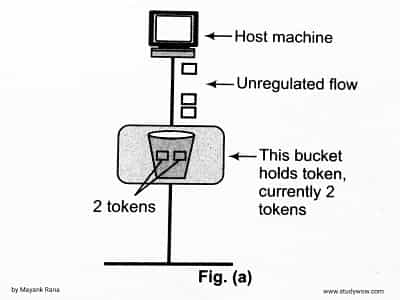
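Steps (i) to (iii) can be sketched in Python as follows. This is a minimal illustration, assuming the queue is given as a list of packet sizes in bytes and that a packet exactly equal to the remaining counter may be sent (the text leaves the equal case open). The function name `leaky_bucket_bytes` is hypothetical.

```python
from collections import deque


def leaky_bucket_bytes(packet_sizes, n):
    """Byte-counting leaky bucket.

    Returns a list with one entry per clock tick, each entry holding the sizes
    (in bytes) of the packets sent during that tick.
    """
    if any(size > n for size in packet_sizes):
        # Such a packet could never be sent, so the loop below would not end.
        raise ValueError("every packet must be at most n bytes")
    queue = deque(packet_sizes)
    schedule = []
    while queue:
        counter = n                           # (i) counter starts at n each tick
        sent = []
        while queue and counter >= queue[0]:  # (ii) send while the counter allows
            size = queue.popleft()
            counter -= size
            sent.append(size)
        schedule.append(sent)                 # (iii) leftover count is discarded
    return schedule


if __name__ == "__main__":
    print(leaky_bucket_bytes([200, 700, 500, 450, 400, 200], n=1000))
    # [[200, 700], [500, 450], [400, 200]]
```

Because the counter is reset at every tick rather than carried over, capacity left unused in one tick cannot be saved for a later one; the token bucket algorithm relaxes exactly this restriction.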

2. Token Bucket Algorithm:

As we know, the leaky bucket algorithm enforces a rigid pattern on the output stream, irrespective of the pattern of the input. For many applications, it is better to allow the output to speed up somewhat when a large burst arrives than to lose the data. The token bucket algorithm allows idle hosts to accumulate credit for the future in the form of tokens. At each tick of the clock, the system adds n tokens to the bucket, and it removes one token for every cell (or byte) of data sent. The token bucket can easily be implemented with a counter, which is initialised to zero.

Each time a token is added, the counter is incremented by 1. Each time a unit of data is sent, the counter is decremented by 1. When the counter is zero the host cannot send data.

The algorithm follows these steps:

Step 1. Tokens are added to the bucket at regular intervals.

Step 2. The bucket has a maximum capacity.

Step 3. If a packet is ready, a token is removed from the bucket and the packet can be sent.

Step 4. If there is no token in the bucket, the packet cannot be sent.

In Fig. (a), the token bucket holds two tokens before any packets are sent.

Fig. (b) shows the token bucket after two packets have been sent. One packet still remains, because no token is left.

Now suppose an idle host has accumulated 10,000 tokens. If it wants to send bursty data, it can consume all 10,000 tokens at once to send 10,000 cells or bytes. Thus, a host can send bursty data as long as the bucket is not empty, as shown in Fig. (c).

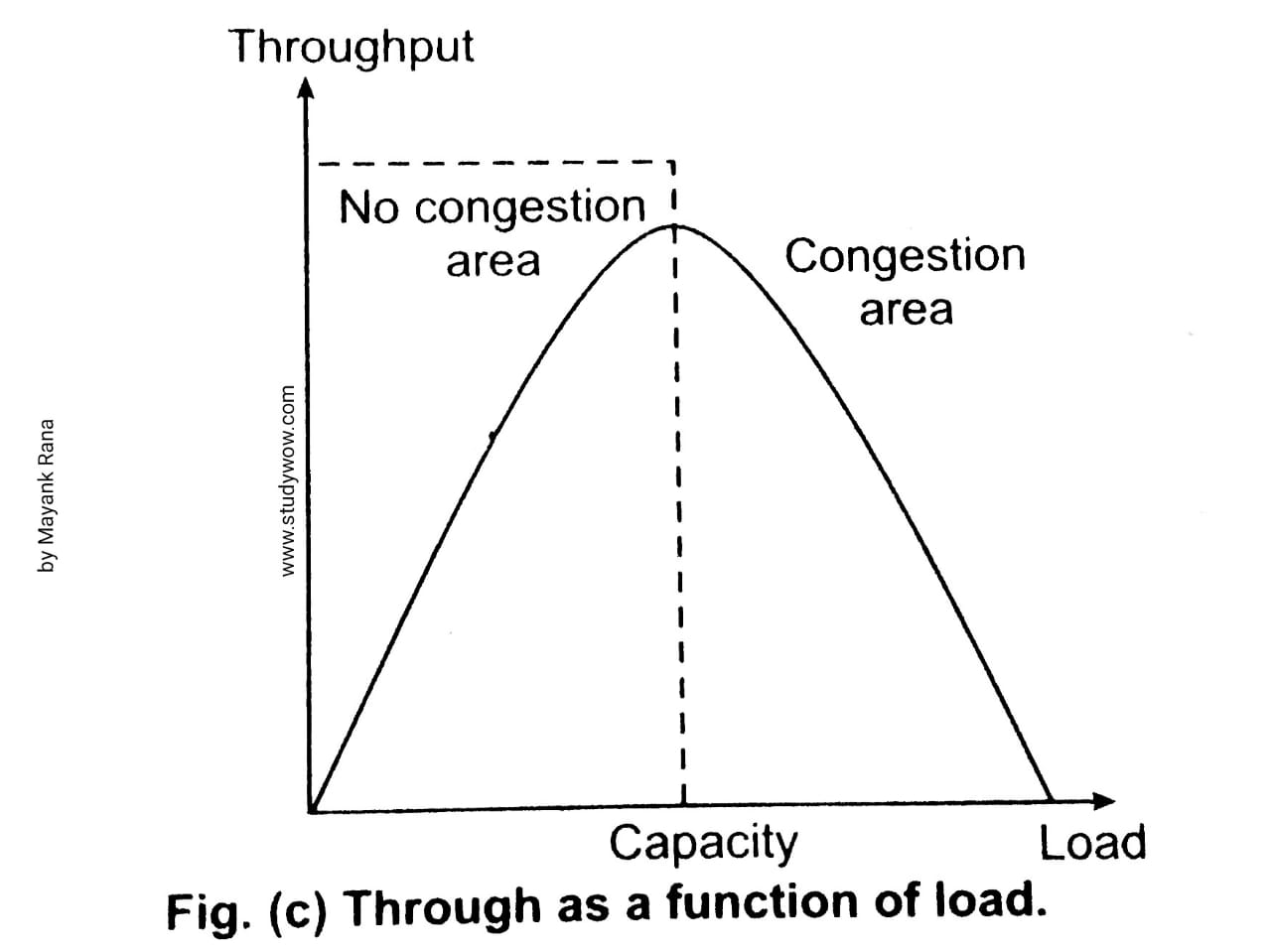
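The token bucket steps and the figure walkthrough can be sketched as the following Python class. It is a minimal illustration, not a reference implementation: tokens are kept in a plain integer counter capped at a maximum capacity, and one token is spent per unit of data (a packet in the Fig. (a)/(b) example, a cell or byte in the burst example). The class name and the rate of 100 tokens per tick used in the burst example are hypothetical values chosen for illustration.

```python
class TokenBucket:
    """Token bucket: tokens_per_tick tokens added each tick, up to capacity.

    Sending one unit (a packet, cell or byte) removes one token; with no
    tokens left, nothing more can be sent.
    """

    def __init__(self, tokens_per_tick, capacity, tokens=0):
        self.tokens_per_tick = tokens_per_tick
        self.capacity = capacity
        self.tokens = min(tokens, capacity)   # the counter, normally starting at 0

    def tick(self):
        # Steps 1 and 2: add tokens at regular intervals, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + self.tokens_per_tick)

    def send(self, waiting):
        """Send as many of `waiting` units as tokens allow; return how many."""
        sent = min(waiting, self.tokens)      # Steps 3 and 4: one token per unit
        self.tokens -= sent
        return sent


if __name__ == "__main__":
    # Fig. (a)/(b): two tokens, three packets waiting.
    fig = TokenBucket(tokens_per_tick=1, capacity=10, tokens=2)
    sent = fig.send(3)
    print(sent, 3 - sent)        # 2 1  (two sent, one left waiting)

    # Burst: an idle host collects tokens, then spends them all at once.
    host = TokenBucket(tokens_per_tick=100, capacity=10_000)
    for _ in range(100):
        host.tick()
    print(host.tokens)           # 10000
    print(host.send(10_000))     # 10000
```

Unlike the leaky bucket, unused tokens are kept (up to the capacity), so a host that stays idle can later send a burst at once instead of being held to a fixed rate.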

Q5. Explain the concept of congestion control. How is the performance of a network measured?

Ans. Congestion is the situation that occurs when too many packets are present in the subnet (a part of a larger network). Congestion degrades the performance of the network. When the number of packets dumped into the subnet by the hosts is within its carrying capacity, they are all delivered (except for a few that are afflicted with transmission errors), and the number delivered is proportional to the number sent. However, as traffic increases too far, the routers are no longer able to cope and they begin losing packets. At very high traffic, performance collapses completely and almost no packets are delivered.

Congestion control refers to the techniques and mechanisms that can either prevent congestion before it happens in the network or remove congestion after it has happened.

Congestion control involves two factors that measure the performance of the network: delay and throughput.

1. Delay:

When the load is much less than the capacity of the network, the delay is at a minimum and is composed of propagation delay and processing delay. When the load reaches the capacity, the delay increases sharply, because the waiting time in the queues must now be added. Note that the delay becomes infinite when the load is greater than the capacity.

2. Throughput:

Throughput in a network can be defined as the number of packets passing through the network in a unit of time. When the load is below the capacity of the network, the throughput increases proportionally with the load.

We might expect the throughput to remain constant once the load reaches the capacity, but in practice it declines sharply. The reason is the discarding of packets by the routers. Whenever the load exceeds the capacity, the queues become full and the routers have to discard some packets. Discarding packets does not reduce the number of packets in the network, because the sources retransmit them after their timers expire. Fig. (b) and (c) show the performance of the network on the basis of delay and throughput.
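The shape of the delay curve can be illustrated with a standard queueing result that the text itself does not use. If a single router queue is modelled as an M/M/1 queue (an assumption made only for this illustration), with packets arriving at `load` packets per second and served at `capacity` packets per second, the mean time a packet spends at that router is 1/(capacity - load), which grows without bound as the load approaches the capacity. The sketch below computes that value and the idealised throughput min(load, capacity); the function names are hypothetical, and propagation delay is not included.

```python
import math


def mm1_mean_delay(load, capacity):
    """Mean time (seconds) a packet spends in an M/M/1 queue: waiting + service.

    load and capacity are in packets per second. This is an assumed model,
    used only to show how delay behaves as load approaches capacity.
    """
    if load >= capacity:
        return math.inf      # the queue grows without bound: delay is unbounded
    return 1.0 / (capacity - load)


def ideal_throughput(load, capacity):
    """Throughput if the network delivered everything up to capacity, no more."""
    return min(load, capacity)


if __name__ == "__main__":
    for load in (100, 900, 990, 1000):
        print(load, mm1_mean_delay(load, 1000), ideal_throughput(load, 1000))
```

With a capacity of 1,000 packets per second, the mean delay is about 1.1 ms at a load of 100, 10 ms at 900, 100 ms at 990, and unbounded at 1,000. In a real network, throughput beyond capacity falls below this ideal line, because routers discard packets that senders then retransmit.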

[Topics= 1.Congestion. 2.Congestion Control and its causes. 3.Difference between Congestion Control and Flow Control. 4.Congestion Control Algorithm. 5.How to measure performance of network.]


Join us on Facebook and Twitter to get the latest study material. You can also ask us any questions.

Facebook = @allbcaweb


Twitter = @allbcaweb


Email= allbca.com@gmail.com

Send us your query anytime about computer network notes!


